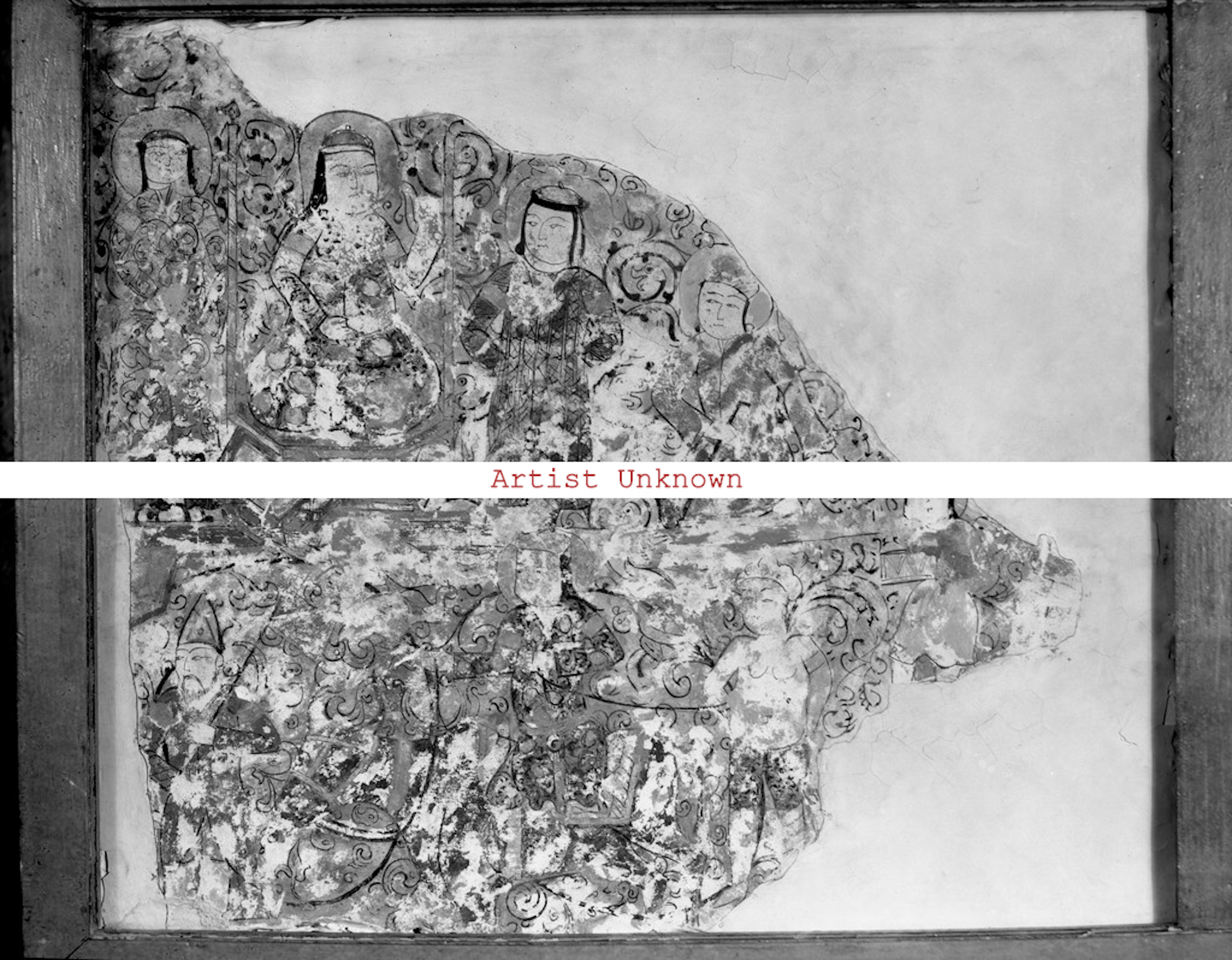

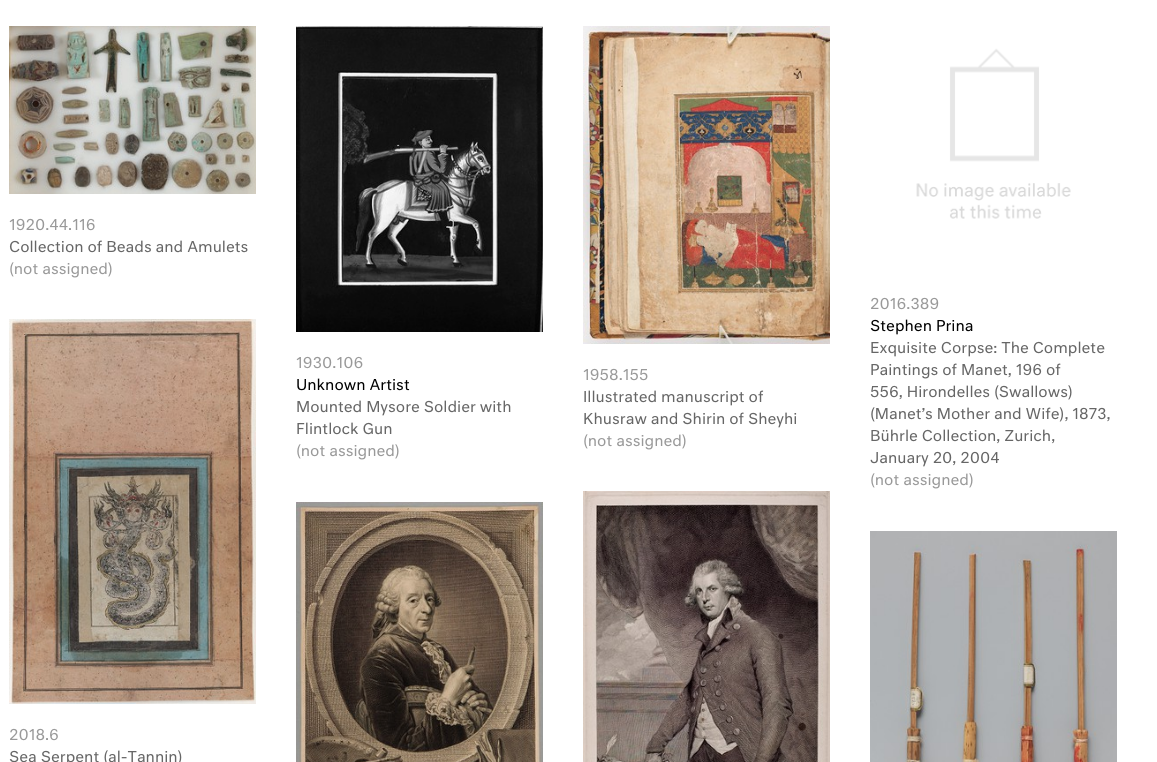

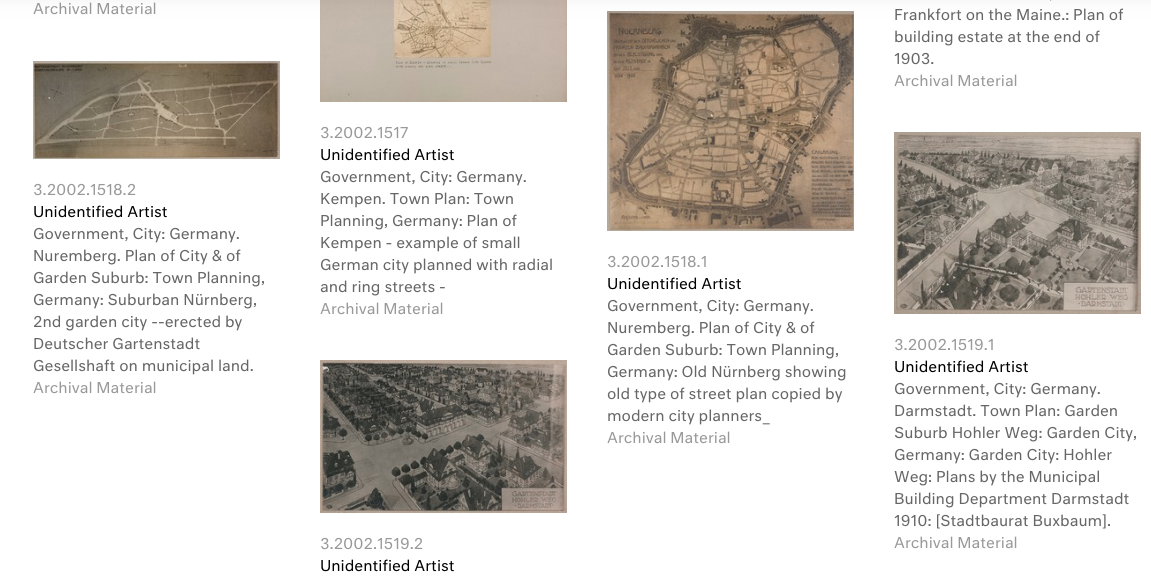

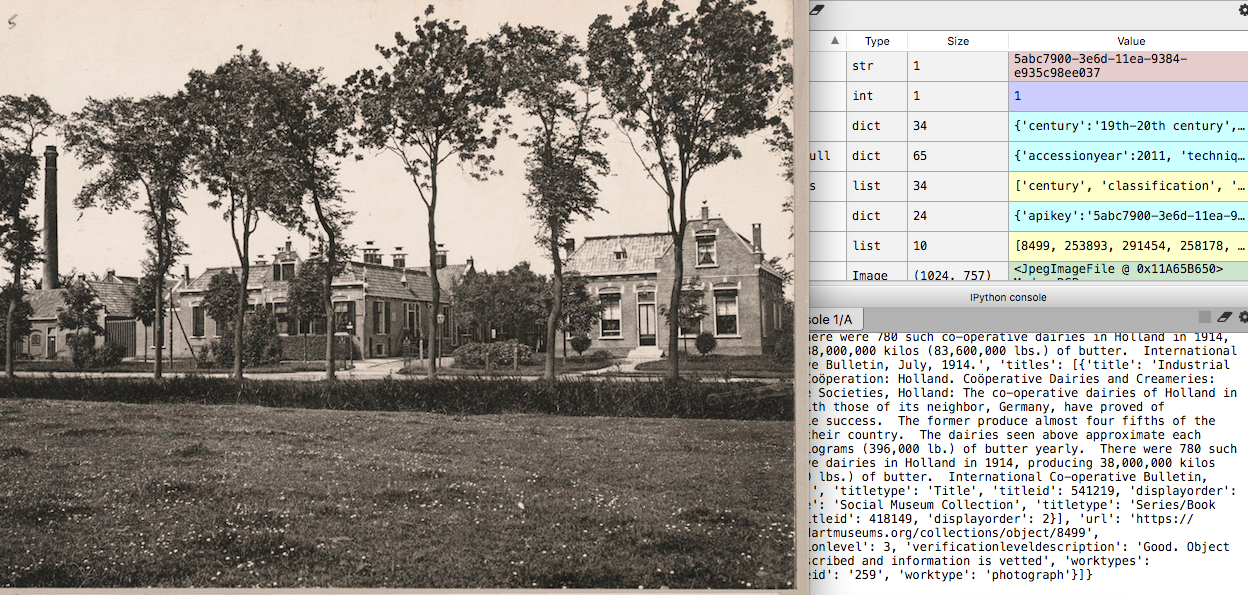

Online recommendation systems are information filtering systems that provide users with streams of prioritized content based on expected individual preferences. Although they come in different types (collaborative, content-based, or hybrid filtering), they typically rely on machine learning, a form of artificial intelligence, to make predictions and profile personal taste. Drawing on previous research in critical algorithm studies, "This Recommendation System is Broken" is a computational art project that tackles the limitations of predictive content personalization and automated sorting.

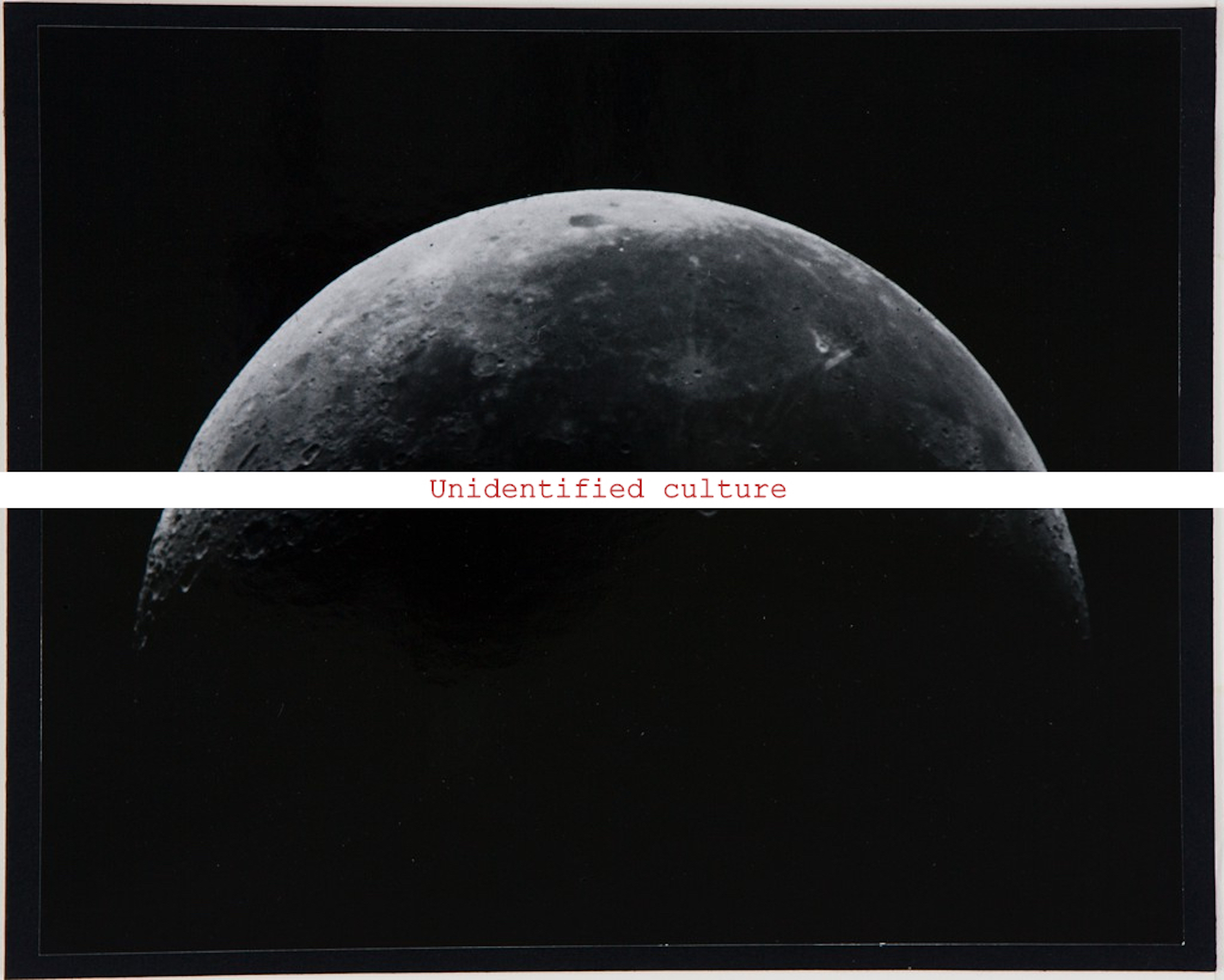

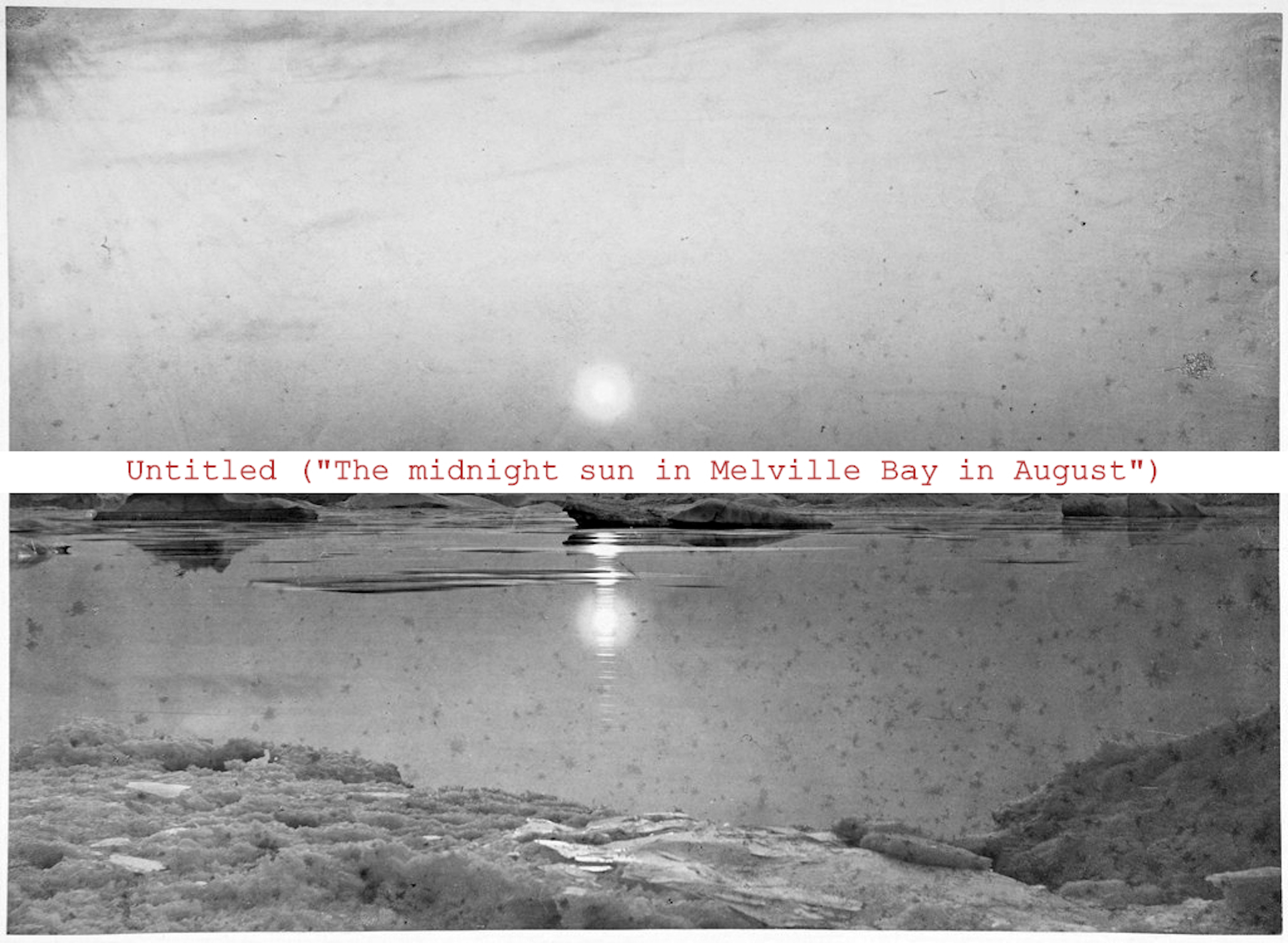

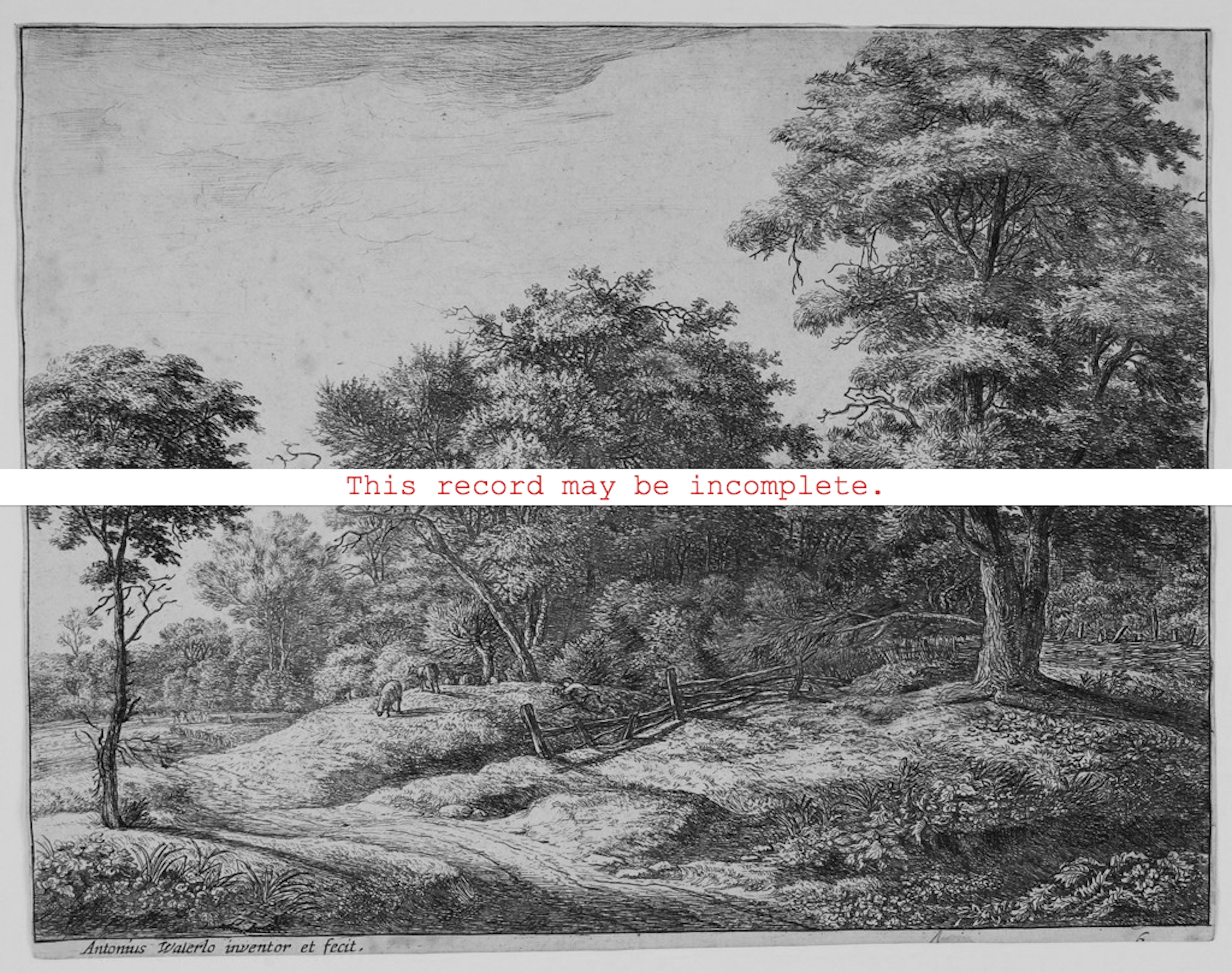

By using creative coding and guided randomization, this project upends the expectations of carefully automated choice, surfacing suggestions from the vast body of undervalued, hidden, and unseen artworks in the museum collection. "This Recommendation System is Broken" ultimately challenges the public to rethink museum collections in terms of visible and in-visible objects, inviting us to explore what we might call "brokenness" in recommendation systems and to reconsider our understanding of marginalized art history.
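As an illustration only, one way to picture guided randomization is as weighted random sampling that favors the least-seen works. The sketch below is not the project's actual code: the function name `broken_recommendations` and the record fields `title` and `views` are hypothetical, and the inverse-popularity weighting is an assumption chosen for clarity.

```python
import random


def broken_recommendations(artworks, k=3, seed=None):
    """Pick k distinct titles at random, favoring rarely viewed works.

    `artworks` is a list of dicts with hypothetical keys "title" and
    "views". This is an assumed weighting scheme, not the project's own.
    """
    rng = random.Random(seed)
    keyed = []
    for art in artworks:
        # Fewer views means a larger weight, so the work surfaces more often.
        weight = 1.0 / (1 + art["views"])
        # Weighted sampling without replacement: draw u in [0, 1) and
        # rank by u ** (1 / weight); the k largest keys are selected.
        key = rng.random() ** (1.0 / weight)
        keyed.append((key, art["title"]))
    keyed.sort(reverse=True)
    return [title for _, title in keyed[:k]]
```

A conventional recommender would rank by predicted interest or popularity; here the ranking is deliberately inverted and randomized, so every work keeps some chance of appearing while the overlooked ones are the most likely to surface.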